The Six Principles of Responsible AI According to Microsoft and GitHub

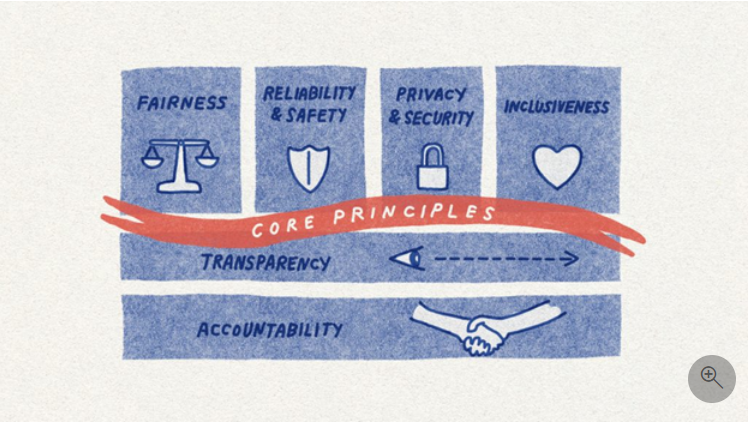

Microsoft has identified six key principles that should guide the development and use of AI, and GitHub, a Microsoft subsidiary, applies the same principles. These principles are:

1. Fairness

AI systems must treat all individuals fairly.

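They should avoid affecting similarly situated groups of people in different ways. For example, when an AI system provides guidance in medical, financial, or professional contexts, it should make consistent recommendations to individuals with comparable symptoms, financial circumstances, or qualifications.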

Techniques used to detect bias and reduce unfair impacts include:

- Reviewing training data

- Testing with balanced demographic samples

- Adversarial debiasing

- Monitoring model performance across user segments

- Correcting unfair scores after prediction

Training models on diverse and balanced data promotes fairness.
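To make "monitoring model performance across user segments" concrete, here is a minimal Python sketch that computes the selection rate and accuracy for each group, plus the gap in selection rates between groups (a metric usually called the demographic parity difference). The loan data, group labels, and function names are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def rates_by_group(records):
    """Return {group: (selection_rate, accuracy)} for (group, y_true, y_pred) tuples.

    Labels and predictions are binary (0 or 1); 1 means "approved".
    """
    counts = defaultdict(lambda: [0, 0, 0])  # per group: [total, selected, correct]
    for group, y_true, y_pred in records:
        c = counts[group]
        c[0] += 1
        c[1] += y_pred
        c[2] += int(y_true == y_pred)
    return {g: (sel / n, correct / n) for g, (n, sel, correct) in counts.items()}

def demographic_parity_difference(records):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    selection_rates = [sel for sel, _ in rates_by_group(records).values()]
    return max(selection_rates) - min(selection_rates)

# Hypothetical loan decisions: (group, actually_repaid, model_approved)
decisions = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 1, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 1, 0),
]

for group, (selection, accuracy) in sorted(rates_by_group(decisions).items()):
    print(f"group {group}: selection rate {selection:.2f}, accuracy {accuracy:.2f}")
print(f"demographic parity difference: {demographic_parity_difference(decisions):.2f}")
```

Here both groups have identical repayment outcomes, yet group A is approved twice as often as group B (a gap of 0.25), which would warrant a closer look at the training data. Open-source toolkits such as Fairlearn offer more complete versions of these metrics.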

2. Reliability and Safety

AI systems must operate reliably, safely, and consistently.

They should:

- Respond safely to unexpected conditions

- Resist malicious manipulation

- Reduce risks of physical, emotional, or financial harm

A reliable system is accurate and predictable under normal conditions and robust when conditions deviate from the norm.
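One way to respond safely to unexpected conditions is to check inputs against the range the model was built for and defer to a human instead of guessing. The sketch below assumes hypothetical feature ranges and a stand-in linear model; a real system would derive its ranges from the training data and call its own model.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical valid input ranges, e.g. the ranges seen in the training data.
FEATURE_RANGES = {"age": (18, 100), "income": (0, 1_000_000)}

@dataclass
class Decision:
    score: Optional[float]    # None when the system declines to predict
    needs_human_review: bool
    reason: str

def toy_model(features: dict) -> float:
    """Stand-in for a real model: a fixed linear score clipped to [0, 1]."""
    raw = 0.002 * features["age"] + features["income"] / 200_000
    return min(max(raw, 0.0), 1.0)

def safe_predict(features: dict) -> Decision:
    """Predict only for inputs inside the expected ranges; otherwise defer to a human."""
    for name, (low, high) in FEATURE_RANGES.items():
        value = features.get(name)
        # Reject missing, non-numeric, and NaN values before the range check.
        if value is None or not isinstance(value, (int, float)) or math.isnan(value):
            return Decision(None, True, f"missing or invalid {name}")
        if not low <= value <= high:
            return Decision(None, True, f"{name}={value} outside [{low}, {high}]")
    return Decision(toy_model(features), False, "ok")

print(safe_predict({"age": 40, "income": 50_000}))
print(safe_predict({"age": 250, "income": 50_000}))  # out of range: deferred
print(safe_predict({"age": 40}))                     # missing field: deferred
```

Returning an explicit "needs human review" decision, rather than raising an error or silently clipping the input, keeps the failure visible to whoever consumes the result.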

3. Privacy and Security

AI systems must be secure and respect privacy.

Microsoft and GitHub build privacy and security into their responsible AI strategy.

Key measures include:

- Obtaining explicit user consent before collecting data

- Collecting only necessary data

- De-identifying personal data (for example, through pseudonymization or aggregation)

- Encrypting sensitive data in transit and at rest
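The data-minimization and de-identification measures above can be sketched in a few lines of standard-library Python: drop fields that are not needed, replace the user identifier with a keyed HMAC-SHA256 pseudonym, and publish only aggregate counts, suppressing groups smaller than a threshold. The field names, the key, and the threshold of 3 are assumptions for illustration; in practice the key would be kept in a vault rather than in source code.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret; in practice it would be fetched from a vault, never hard-coded.
PSEUDONYM_KEY = b"example-key-do-not-use-in-production"

# Data minimization: keep only the fields the analysis actually needs.
ALLOWED_FIELDS = {"user_id", "country", "feature_used"}

def minimize(record: dict) -> dict:
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}

def pseudonymize(user_id: str) -> str:
    """Keyed hash: the same user always gets the same pseudonym, and without the
    key the mapping cannot be recomputed from a list of known IDs."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def aggregate(records, field: str, min_group_size: int = 3) -> dict:
    """Count records per value of `field`, suppressing groups too small to report."""
    counts = Counter(r[field] for r in records)
    return {value: n for value, n in counts.items() if n >= min_group_size}

raw = [
    {"user_id": "alice", "email": "alice@example.com", "country": "FR", "feature_used": "search"},
    {"user_id": "bob", "email": "bob@example.com", "country": "FR", "feature_used": "chat"},
    {"user_id": "carol", "email": "carol@example.com", "country": "FR", "feature_used": "search"},
    {"user_id": "dave", "email": "dave@example.com", "country": "FR", "feature_used": "chat"},
    {"user_id": "erik", "email": "erik@example.com", "country": "DE", "feature_used": "search"},
]

clean = [{**minimize(r), "user_id": pseudonymize(r["user_id"])} for r in raw]
print(clean[0])                     # no email, hashed user_id
print(aggregate(clean, "country"))  # the single DE record is suppressed
```

Pseudonymized records can still be re-linked by whoever holds the key, which is why pseudonymization is weaker than true anonymization and is usually combined with aggregation and access controls.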

Tools used:

- Hardware Security Modules (HSMs)

- Secure vaults like Azure Key Vault

- Envelope encryption, in which data is encrypted with a data encryption key that is itself encrypted with a separate key encryption key

Access to data and models must be strictly controlled, audited, and limited.
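Strictly controlled, audited, and limited access can be illustrated with a role-based check that grants each role only the permissions it needs and records every attempt, whether allowed or denied. The roles, permission strings, and in-memory audit trail below are hypothetical; production systems would typically rely on their platform's identity and access management and write audit records to tamper-evident storage.

```python
from datetime import datetime, timezone

# Hypothetical least-privilege mapping: each role gets only the permissions it needs.
ROLE_PERMISSIONS = {
    "data_scientist": {"read:model"},
    "ml_engineer": {"read:model", "deploy:model"},
    "privacy_officer": {"read:training_data"},
}

# In-memory stand-in for an append-only, tamper-evident audit store.
AUDIT_TRAIL: list = []

def authorize(user: str, role: str, permission: str) -> bool:
    """Allow the action only if the role grants it, and audit every attempt."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    AUDIT_TRAIL.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed

print(authorize("dana", "data_scientist", "read:model"))    # allowed
print(authorize("dana", "data_scientist", "deploy:model"))  # denied, but still logged
for entry in AUDIT_TRAIL:
    print(entry)
```

Logging denied attempts as well as granted ones matters for audits: repeated denials are often the first sign of misconfigured roles or attempted misuse.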

4. Inclusiveness

AI systems must be accessible to all and promote inclusion.

This means:

- Functioning for diverse users

- Being usable by everyone, regardless of ability

- Being available in all regions, including developing countries

- Incorporating feedback from diverse communities

Examples of inclusive AI:

- Facial recognition that performs accurately across skin tones, ages, and genders

- Interfaces compatible with screen readers

- Translation that handles regional dialects

- Diverse design teams

Inclusive solutions include:

- Voice commands, subtitles, screen readers

- Linguistic and cultural adaptation

- Offline or low-connectivity functionality

5. Transparency

AI systems must be understandable and interpretable.

Designers should:

- Clearly explain how systems work

- Justify design choices

- Be honest about AI capabilities and limitations

- Enable auditing through logs and reports
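Auditing through logs and reports depends on recording each decision with enough context to reconstruct it later. This sketch writes one JSON line per decision (timestamp, model version, inputs, output, and reason) and then summarizes the log into a simple report. The model name, field names, and example decisions are hypothetical.

```python
import io
import json
from collections import Counter
from datetime import datetime, timezone

def log_decision(stream, model_version: str, inputs: dict, output: str, reason: str) -> None:
    """Append one decision as a JSON line so it can be audited later."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "reason": reason,
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")

def report(log_text: str) -> dict:
    """Summarize a JSON-lines log: number of decisions per (model version, output)."""
    summary = Counter()
    for line in log_text.splitlines():
        entry = json.loads(line)
        summary[(entry["model_version"], entry["output"])] += 1
    return dict(summary)

# Hypothetical usage; a real system would write to durable, access-controlled storage.
log = io.StringIO()
log_decision(log, "credit-model-1.2", {"income_k": 50}, "approved", "score above threshold")
log_decision(log, "credit-model-1.2", {"income_k": 20}, "referred", "score near threshold")
log_decision(log, "credit-model-1.2", {"income_k": 80}, "approved", "score above threshold")
print(report(log.getvalue()))
```

Recording the model version with every decision lets auditors tie an outcome to the exact model that produced it, even after the model has been retrained.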

Transparency tools include:

- Documentation of data and models

- Explainable interfaces

- Testing dashboards

- AI debugging tools
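For an explainable interface, the simplest case is a linear scoring model, where each feature's contribution to the score is exactly its weight times its value. The weights, bias, and feature names below are hypothetical; for non-linear models, attribution methods such as SHAP approximate the same per-feature breakdown.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.0, "years_employed": 0.1}
BIAS = -1.0

def explain(features: dict):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

score, ranked = explain({"income_k": 50, "debt_ratio": 0.5, "years_employed": 3})
print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

Ranking contributions by absolute size lets an interface show users the factors that pushed their result up or down the most, rather than a bare score.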

Transparency strengthens trust, safety, fairness, and inclusion.

6. Accountability

People must be responsible for the operation of AI systems.

This involves:

- Continuous performance monitoring

- Proactive risk management
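Continuous performance monitoring can start as simply as tracking accuracy over a sliding window of recent labeled predictions and alerting a named owner when it drops below a threshold, so that a specific person is responsible for responding. The window size, threshold, owner address, and prediction stream below are assumptions for illustration.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the last `window` labeled predictions and flag drops."""

    def __init__(self, owner: str, window: int = 100, alert_below: float = 0.9):
        self.owner = owner                    # person accountable for responding
        self.outcomes = deque(maxlen=window)  # True = correct prediction
        self.alert_below = alert_below

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def record(self, prediction, actual) -> bool:
        """Store one outcome; return True once the window is full and accuracy is too low."""
        self.outcomes.append(prediction == actual)
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.alert_below

# Hypothetical stream of (prediction, actual) pairs arriving after deployment.
monitor = AccuracyMonitor(owner="model-owner@example.com", window=5, alert_below=0.8)
stream = [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0), (1, 0), (0, 1)]
for prediction, actual in stream:
    if monitor.record(prediction, actual):
        print(f"ALERT to {monitor.owner}: accuracy {monitor.accuracy():.2f}")
```

Waiting until the window is full avoids false alarms from the first few predictions, at the cost of a short delay before problems can be detected.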

Microsoft considers accountability a foundational principle. Organizations must take responsibility for the systems they deploy.